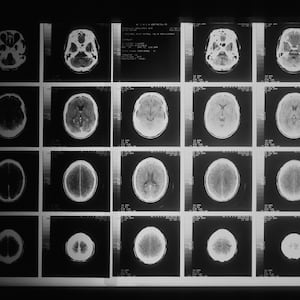

People whose paralysis from conditions like stroke or ALS has robbed them of the ability to speak no longer seem destined for permanent silence. This is thanks to implants that read the brain’s electrical impulses and translate them into words that can be communicated to others.

A pair of new studies published in Nature on Wednesday has pushed this effort forward in groundbreaking ways. A Stanford University team demonstrated an AI-powered brain implant that reads brain signals and translates them into text at an average rate of 62 words per minute, nearly 3.5 times faster than the previous record set by similar implants.

The second study, from the University of California, San Francisco’s Chang Lab, is arguably more exciting. The lab demonstrated a brain implant that can, for the first time, synthesize spoken words and facial expressions directly from brain signals. The technology gave speech back to Ann Johnson, a 48-year-old teacher who was left paralyzed and unable to talk after a stroke. The device now lets her talk to others through a digital avatar that speaks and makes facial expressions that accurately reflect what she is trying to say.

“Our goal is to restore a full, embodied way of communicating, which is really the most natural way for us to talk with others,” Edward Chang, a UCSF neuroscientist who leads the Chang Lab, said in a statement. “These advancements bring us much closer to making this a real solution for patients.”

The Stanford team’s device builds on previous work that produced text from brain signals: it records neural activity from brain cells and uses an AI model to decode the signals associated with attempted speech. In its demonstration, the device’s error rate was 9.1 percent on a 50-word vocabulary, nearly three times lower than that of previous devices.

But it’s the Chang Lab’s work that really pushes the frontier of brain implant technology. The implant records brain cell activity through electrodes placed on the surface of the brain. Its brain-to-text function reached 78 words per minute, with an error rate of just 4.9 percent on a 50-phrase set.

The marquee feature, however, is the brain-to-speech function. Although its error rate is much higher (25 percent for a 1,000-word vocabulary and 28 percent for a 39,000-word vocabulary), turning brain signals into audible speech is still a remarkable achievement. The facial movements and nonverbal expressions rendered by the avatar also proved quite accurate.

“The accuracy, speed and vocabulary are crucial,” UCSF researcher and study co-author Sean Metzger said in a statement. “It’s what gives a user the potential, in time, to communicate almost as fast as we do, and to have much more naturalistic and normal conversations.”

For Chang and his team, the main next steps are to seek FDA approval for the device, so that more patients with anarthria (the medical term for loss of speech) can benefit from it, and to develop a wireless version that would leave patients feeling less encumbered and freer.