Text-to-image generators are having a moment. You’ve probably played around with them yourself already. These AI-powered bots work by turning text prompts into actual images.

Here’s an example using the popular DALL-E mini bot for the prompt “orange cat”:

Er, close enough.

Tony Ho Tran/DALL-E mini by Craiyon.com

More powerful and sophisticated bots like Midjourney and DALL-E are capable of creating incredibly realistic and stunning images. In fact, the former created a “painting” so beautiful, it wound up taking first place at an art contest at the Colorado State Fair over the summer, much to the chagrin of many.

While captivating, the bots still run into a pernicious problem that has plagued AI since the very beginning: bias. We’ve seen the modern-day consequences unfold already: HR bots that won’t hire people of color, chatbots that go on racist rants, bots that spout Trump apologia, and so forth. And unfortunately, the text-to-image generators run into similar hurdles.

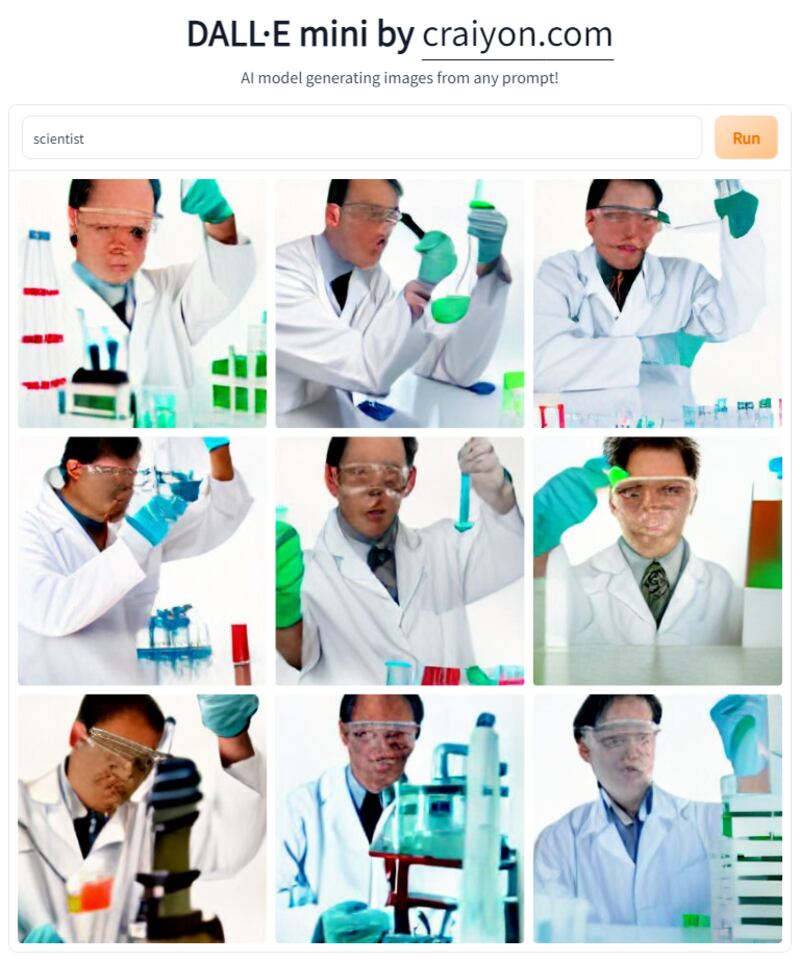

For example, if you put in the prompt “scientist” in DALL-E mini, you get this:

Horrifically malformed faces aside, it’s easy to see that all these scientists are white—which is a problem. After all, not all scientists are white. Implying that they are is not only wrong, it’s also harmful. While this might not seem as dangerous as an AI that, say, was used to help judges determine jail sentences resulting in harsher punishments for Black convicts, it’s still bad—and an all too common occurrence when it comes to these bots.

That’s why many were pleasantly surprised when OpenAI, the research lab behind DALL-E, announced in July that it had implemented a “new technique” to reduce bias in the bot’s image results.

“This technique is applied at the system level when DALL-E is given a prompt describing a person that does not specify race or gender, like ‘firefighter,’” the company wrote in a blog post. “Based on our internal evaluation, users were 12x more likely to say that DALL·E images included people of diverse backgrounds after the technique was applied.”

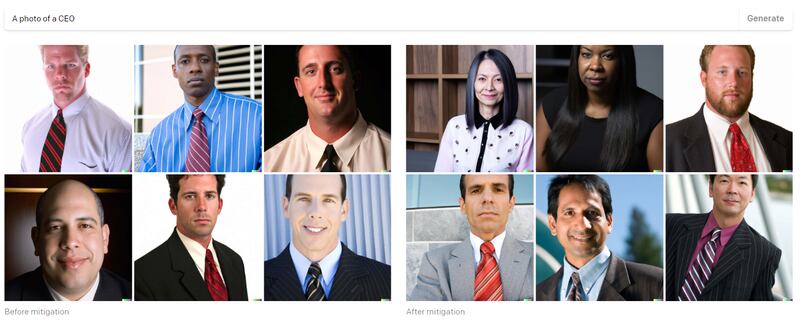

And results for prompts describing people did indeed become more equal. Before the update, for example, images that were prompted with ‘CEO’ were pretty much exclusively white men. After the update, users saw a much more diverse set of results.

OpenAI didn’t say exactly what it did to make the results less biased, but some users found clues pointing to a unique, albeit sneaky, workaround: DALL-E would just tack random demographic information onto the prompts. For example, if you entered “a photo of a teacher,” the bot would simply append words like “woman” or “Black” to the original.
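To make the suspected workaround concrete, here is a minimal Python sketch of what tacking demographic words onto a prompt could look like. It is purely illustrative: OpenAI has not published its method, and the word lists, the `augment_prompt` function, and the rule for deciding when a prompt "describes a person" are all assumptions invented for this example.

```python
import random

# Hypothetical word lists. OpenAI has not disclosed what, if anything, it appends.
DEMOGRAPHIC_TERMS = ["woman", "man", "Black", "Asian", "Hispanic", "white"]
ALREADY_SPECIFIED = {"woman", "man", "female", "male",
                     "black", "asian", "hispanic", "white"}
PERSON_WORDS = {"person", "teacher", "firefighter", "ceo", "scientist"}


def augment_prompt(prompt, rng):
    """Append one random demographic term to a prompt about a person,
    unless the prompt already mentions race or gender."""
    words = set(prompt.lower().replace(",", " ").split())
    if not (words & PERSON_WORDS):
        return prompt  # not about a person: leave untouched
    if words & ALREADY_SPECIFIED:
        return prompt  # the user already specified race or gender
    return f"{prompt}, {rng.choice(DEMOGRAPHIC_TERMS)}"


rng = random.Random()
print(augment_prompt("orange cat", rng))            # unchanged
print(augment_prompt("a photo of a teacher", rng))  # gains one random term
```

Notice that nothing in the sketch touches the image model itself. Only the text going in changes, which is why critics describe this kind of fix as a band-aid.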

It’s a start—but it doesn’t completely prevent DALL-E from suffering from bias issues later on. “At a very technical level, it’s a band-aid,” Casey Fiesler, an AI ethics researcher at the University of Colorado-Boulder, told The Daily Beast. “The bias is still there in the system. It’s there in the innards of DALL-E. What it’s creating by default represents bias. However, they have changed the system in such a way that the results are not as biased as they would otherwise have been.”

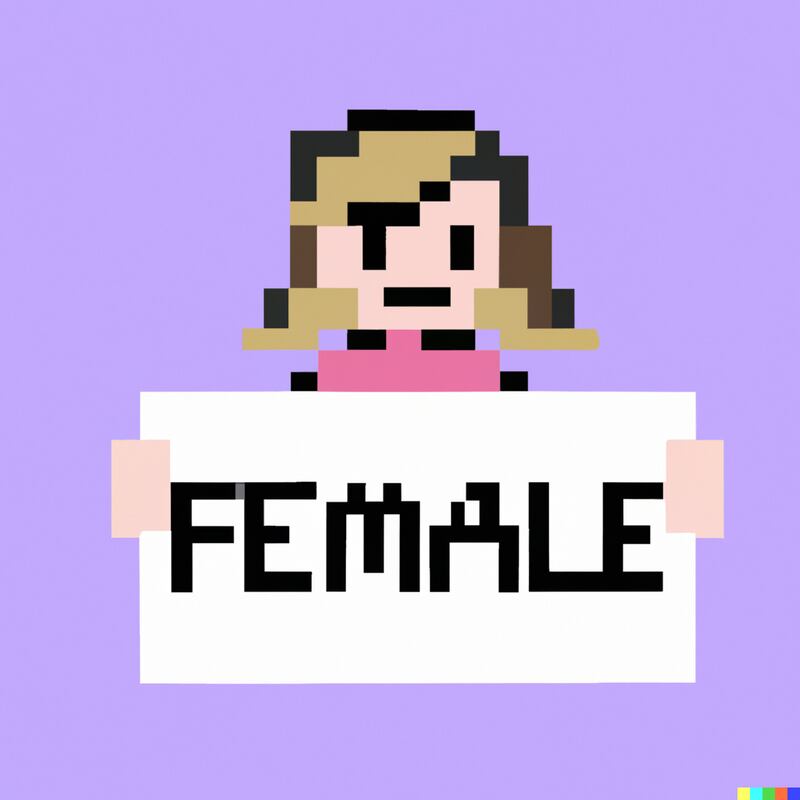

In August, Fiesler took to TikTok to share the clever method she and other users used to find evidence that suggests OpenAI was using this bias band-aid. For example, one user entered the prompt “person holding a sign that says” and discovered that the generated images would occasionally show people holding a sign that included words that described what race or gender they were.

Prompt: “pixel art of a person holding a text sign that says.”

Richard x DALL-E

While this “fix” results in more diversity for human-based prompts, it ultimately doesn’t do anything to address the root cause of the problem itself.

And that cause is the data that is used to train the bot in the first place. That’s not unique to text-to-image generators like DALL-E either. It’s a perennial problem for AI. For example, if you try to create a bot that chooses who gets a home loan, but only train it on historical data about who typically gets one, fewer loans will be given out to Black and Brown people.
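A toy Python sketch shows how that happens. The records below are made up for illustration and are not real lending data, and the "model" is deliberately crude (it just memorizes each group's historical approval rate), but the pattern is the same one that trips up far more sophisticated systems: whatever skew is in the history comes straight back out as a prediction.

```python
from collections import defaultdict

# Made-up toy history of (group label, approved?) pairs. Not real lending data.
HISTORY = ([("A", True)] * 8 + [("A", False)] * 2 +
           [("B", True)] * 4 + [("B", False)] * 6)


def fit_approval_rates(records):
    """'Train' by memorizing each group's historical approval rate."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def predict(rates, group, threshold=0.5):
    """Approve only if the group's historical approval rate clears the threshold."""
    return rates[group] >= threshold


rates = fit_approval_rates(HISTORY)
print(rates)
print(predict(rates, "A"), predict(rates, "B"))
```

In this toy history, group A was approved 8 times out of 10 and group B only 4 times out of 10, so the "trained" bot approves every applicant from A and rejects every applicant from B without ever looking at an individual application.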

That’s not just a random example either. It actually happened. A 2021 investigation by The Markup found that mortgage lending algorithms nationwide were 80 percent more likely to reject Black applicants than similar white applicants, with that figure spiking to 150 percent in cities like Chicago.

Likewise, if you train a text-to-image generator on existing data sets about CEOs or professors, you’re likely going to see a lot more white men in your results than people of other genders and races, as DALL-E did before its update.

“My first response is that it’s better than nothing,” Fiesler said. “I’d rather have this than just getting results of white men. To some extent, you could make an argument for that being what matters. The output is what matters. You don’t see the rest of it. Think about the impact of this kind of representational bias.”

While the results are more diverse and less biased, the uncomfortable truth is that the problem is still there. The default results for the prompts are just as biased as they were before. The bot isn’t any less biased.

However, Fiesler added that she suspects “there’s already work happening to de-bias the data set” used to train the bot.

The band-aid solution that OpenAI is likely using is a great illustration of the challenges that plague AI and society as a whole. It’s a bleak mirror to the very same demons we face. After all, bias isn’t new and it’s still a problem that we grapple with every single day. Doing nothing isn’t going to help matters—and could make things worse.

“Every time I post a video about this,” said Fiesler, “I always get someone in my comments saying something like, ‘I don’t see what the problem is. Why shouldn’t AI reflect reality? There are more men scientists than women scientists? Why should AI reflect the world you want to be instead of the world that is?’”

“Well,” she countered, “what’s wrong with reflecting a better world than what we have?”

In fact, Fiesler likens the biased AI problem to conversations around media representation. “The issues are very similar in terms of bias,” she explained. “The ability of people being able to see themselves represented is important. If we can have this conversation about art created by humans, we can have this conversation about art created by AI.”

The problem is a daunting one, because at the end of the day, it’s systemic. AI will act like this because we’re like this.

Think of it like raising a child. You try to teach them right and wrong. You want them to be good people when they become adults. But you shouldn’t be surprised that, when they do eventually grow up, they start to act like the very people who raised them.