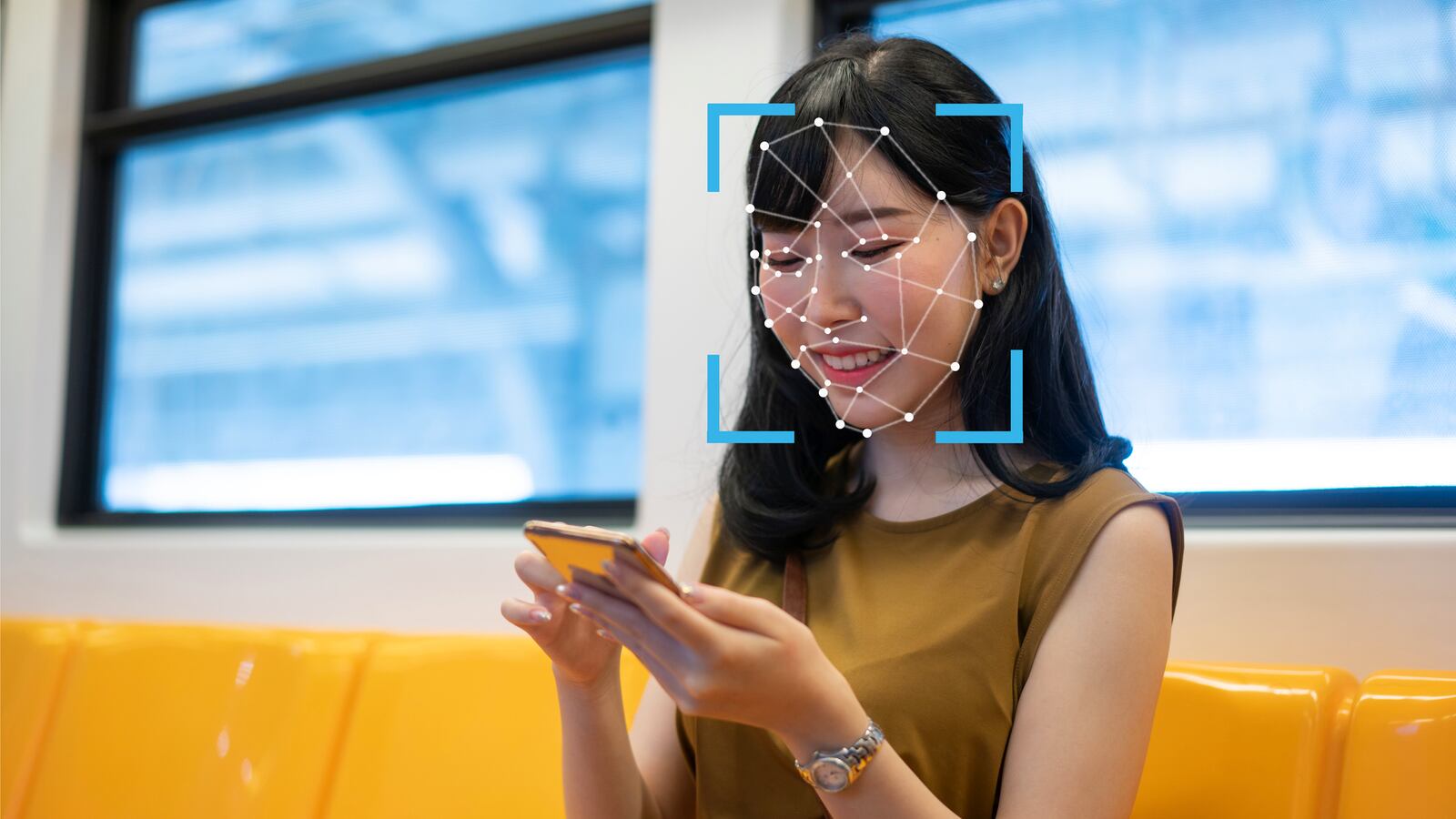

Many people with autism have difficulty interpreting facial expressions. This can make it hard to read social cues in their personal lives, at school, in the workplace, and even in media like movies and TV shows. Now, researchers at MIT have built an AI model that helps shed light on why.

A paper published on Wednesday in The Journal of Neuroscience reports that neurotypical adults (those not displaying autistic characteristics) and adults with autism might differ in a key region of the brain called the inferior temporal (IT) cortex. These differences could affect how readily they detect emotions in facial expressions.

“For visual behaviors, the study suggests that [the IT cortex] plays a strong role,” Kohitij Kar, a neuroscientist at MIT and author of the study, told The Daily Beast. “But it might not be the only region. Other regions like amygdala have been implicated strongly as well. But these studies illustrate how having good [AI models] of the brain will be key to identifying those regions as well.”

Kar’s neural network draws on a previous experiment conducted by other researchers. In that study, AI-generated pictures of faces displaying emotions ranging from fearful to happy were shown to autistic adults and neurotypical adults. The volunteers judged whether each face was happy. Compared with the neurotypical participants, the autistic adults needed a much clearer indication of happiness, such as a bigger smile, before reporting a face as happy.

Kar then fed the data from that experiment into a neural network built to approximately mimic the layers of the human brain’s visual processing system. At first, he found that the network recognized the facial emotions about as well as the neurotypical participants did. He then stripped away layers and retested the network until he reached the last layer, which past research suggests roughly mimics the IT cortex. At that point, the AI struggled to match the neurotypical adults and more closely mimicked the autistic ones.

This suggests that the IT cortex, which sits near the end of the visual processing pipeline, could play a key role in recognizing emotions in faces. The study could also lay the groundwork for a better way to diagnose autism. Kar adds that it might help in developing engaging media and educational tools for autistic children as well.

“Autistic kids sometimes rely heavily on visual cues for learning and instructions,” Kar explained. “Having an accurate model where you can feed in images, and the models tell you, ‘This will work best, and this won’t’ can be very useful for that purpose. Any visual content like movies, cartoons, and educational content can be optimized using such models to maximally communicate with, benefit, and nurture autistic individuals.”