The behind-the-scenes technological battle against political “deepfakes” is ramping up ahead of the 2020 election, and there’s bad news for any trolls hoping to deploy high-quality, nearly undetectable video fakery: Researchers say they’ve developed a new technique that could weed out the phony videos.

Advances in artificial intelligence have put the power to create such videos—which can convincingly depict someone doing and saying things they’ve never done or said—in the hands of virtually anyone able to find and use a breed of specialized software first created by pornography hobbyists to make fake celebrity smut. With the 2020 presidential campaign gearing up, election watchdogs worry that deepfakes will be deployed rampantly in covert propaganda efforts by foreign adversaries and domestic political actors, setting up a replay of Russia’s 2016 election interference—but with multiple adversaries and the volume cranked up to 11.

The potential reach of political video hoaxes was demonstrated vividly last month, when a New York man uploaded a low-tech “shallowfake” hoax video onto his politics-themed Facebook page and it spread like wildfire on social media. The video, which falsely depicted House Speaker Nancy Pelosi drunkenly slurring her words, chalked up four million views and dominated the national conversation for days, with Trump lawyer Rudy Giuliani among those to spread it.

Against that backdrop, the House Intelligence Committee is set to hold its first public hearing on deepfakes on Thursday—finally thrusting the issue onto a national stage. Behind the scenes, though, scientists have been working on developing technological measures to detect deepfake hoaxes.

Among the latest is a proposal by researchers Shruti Agarwal and Hany Farid to offer proactive protection for prominent politicians and heads of state.

In a paper entitled “Protecting World Leaders Against Deep Fakes,” being presented Monday at the Conference on Computer Vision and Pattern Recognition in Long Beach, California, the scientists noted that, as they speak, people cycle through a sequence of facial expressions and head movements that is distinct to each speaker.

By capturing a speaker’s head rotation and the properties of 16 different “facial action units” (standardized movements like a nose wrinkle or a lowered eyebrow), a world leader’s subconscious tics could be effectively fingerprinted from existing, authentic video, providing a template that an artificial intelligence engine could later use to spot deepfakes that don’t follow that precise facial-muscle script.
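To make the fingerprinting idea concrete, here is a minimal Python sketch. It is not the researchers' implementation: the function names, the three feature tracks (stand-ins for the head rotation and 16 action units described above), the synthetic data, and the 0.3 threshold are all assumptions chosen for illustration. The sketch assumes a speaker's habits can be summarized by how strongly each pair of movements rises and falls together, and flags a clip whose pattern strays too far from the reference.

```python
import math
import random
from itertools import combinations


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def fingerprint(tracks):
    """Summarize a clip as the correlation between every pair of movement tracks.

    `tracks` maps a (hypothetical) feature name to its per-frame measurements.
    """
    names = sorted(tracks)
    return [pearson(tracks[a], tracks[b]) for a, b in combinations(names, 2)]


def distance(fp_a, fp_b):
    """Root-mean-square difference between two fingerprints."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(fp_a, fp_b)) / len(fp_a))


def looks_authentic(reference_fp, clip_tracks, threshold=0.3):
    """True if the clip's movement pattern stays close to the reference.

    The threshold is an arbitrary illustrative value, not a tuned one.
    """
    return distance(reference_fp, fingerprint(clip_tracks)) <= threshold


def make_clip(rng, coupled, frames=300):
    """Synthetic clip: if `coupled`, the brow rises whenever the head tilts."""
    head_pitch = [rng.gauss(0, 1) for _ in range(frames)]
    if coupled:
        brow_raise = [p + 0.3 * rng.gauss(0, 1) for p in head_pitch]
    else:
        brow_raise = [rng.gauss(0, 1) for _ in range(frames)]
    nose_wrinkle = [rng.gauss(0, 1) for _ in range(frames)]
    return {
        "head_pitch": head_pitch,
        "brow_raise": brow_raise,
        "nose_wrinkle": nose_wrinkle,
    }


# Build the reference from "authentic" footage, then check two new clips.
reference = fingerprint(make_clip(random.Random(1), coupled=True))
genuine_ok = looks_authentic(reference, make_clip(random.Random(2), coupled=True))
fake_ok = looks_authentic(reference, make_clip(random.Random(3), coupled=False))
```

In this toy setup, the second clip shares the speaker's habit of raising the brow with each head tilt, so it lands near the reference; the third clip lacks that habit and lands far from it. A real system would need measurements extracted from actual video and a far richer set of features.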

In an e-mail interview with The Daily Beast, Farid said the approach is already good enough to detect something like the deepfake of Mark Zuckerberg published on Facebook-owned Instagram this week by a pair of U.K. artists.

“We haven’t built a model for Zuckerberg, but in theory this is the type of fake that we could detect,” he wrote. “This fake, of course, is not very good,” he added, with a connoisseur’s eye. “The audio is not very convincing and the lip-sync is not well synchronized.”

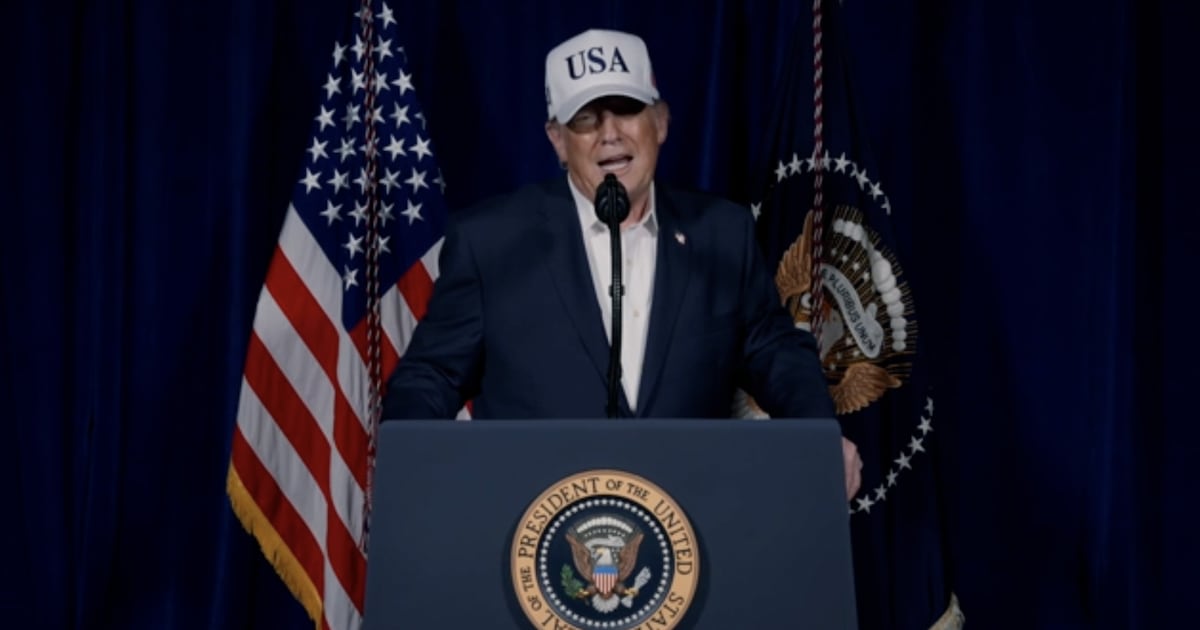

The researchers tested their thesis using video clips of Barack Obama, Hillary Clinton, Bernie Sanders, Donald Trump, and Elizabeth Warren, and determined that their approach is more reliable than current forensic tools that look for pixel anomalies in the footage. But at the moment, their technique is easily thrown off by “different contexts in which the person is speaking,” according to their paper. So fingerprints captured from a formal address won’t reliably match the same person chitchatting in a casual setting. One proposed workaround to make the system practical: “Simply collect a larger and more diverse set of videos in a wide range of contexts,” they wrote.

In the long run, though, Farid worries that the work being poured into solving the deepfake problem simply won’t be able to keep pace with bad actors bent on mischief.

“What worries me is that every few weeks we see a new advance on the synthesis side,” he wrote, “but not nearly as many advances on the detection side.”