Staten Island has a drug problem. Opioid overdose deaths in the New York City borough are 170 percent higher than the national average. While fentanyl is responsible for the majority of deaths, it's not the only culprit. The overprescribing of opioids has contributed to the crisis, as have addiction service providers who are stretched too thin.

Joseph Conte knows about these issues all too well. He’s the executive director of the Staten Island Performing Provider System, a clinical and social service health-care network, and he leads the island’s efforts to address the crisis. Conte told The Daily Beast that the country’s public officials have only recently started putting their money where their mouths are.

“I think it's just these last two years or so, when we've reached 100,000 overdose deaths, that people are really starting to step back and say, ‘Hold on a second. This is one of the leading causes of death in America. Why aren't people trying to understand what is going on here?’” he said.

When Conte’s health-care network received $4 million in funding last November, it launched a program called “Hotspotting the Opioid Crisis.” Researchers from the MIT Sloan School of Management were enlisted to develop and implement an algorithm that predicts who in the system is at risk of an opioid overdose. The Performing Provider System then sends lists of names and contact information to the relevant providers for targeted outreach, so that an individual’s physician, case worker, or someone else involved in their care can call them and re-engage them in the health system. Ultimately, the program is meant to reach at-risk users before the worst happens.

“After an overdose happens, tragic events ensue,” Conte said. “I think it would be really exciting to see if we can develop a way to intervene before someone has an overdose.”

Early signs suggest that it’s working. The Daily Beast has learned from data not yet publicly released that the program cut hospital admissions in half for the more than 400 people enrolled and reduced their emergency room use by 67 percent.

As electronic health records have given clinicians access to vast quantities of patient data, and as processing power has advanced, predictive modeling has gained traction as a potential way to anticipate and prevent opioid overdose deaths. The beauty of these algorithms lies in their ability to synthesize large data sets, recognize hidden trends, and make actionable predictions, capabilities that are desperately needed for an issue as complex and varied in cause as the opioid crisis. But most of these models have so far existed only in closed experiments, tested against retrospective data rather than applied to prospective, real-world decision-making.

In contrast, Staten Island’s program is one of the first of its kind to apply a predictive machine learning algorithm to opioid overdose in a way that directly affects patient care. Other communities are close behind, increasingly looking to AI as a tool to prioritize outreach and support in an overburdened health-care system. However, experts are still grappling with key issues, like validating the models, reducing bias, and safeguarding patients’ data privacy. They stress that more work needs to be done to make sure that the most harmful tendencies of AI don’t rear their ugly digital heads. In other words: Are the models ready, and are we ready for them?

Machine learning algorithms like the ones being used by Conte’s organization are trained on historical data sets that often include hundreds of thousands of patient insurance claims, death records, and electronic health records. From that past data, they learn patterns that help them predict certain future outcomes. And unlike traditional computer models, these algorithms can process vast amounts of data to make informed forecasts.

Staten Island’s program relies on health records, death records, and insurance claims to “learn” about an individual’s demographics, diagnoses, and prescriptions. The model trains on more than a hundred of these variables to predict an individual’s future likelihood of an overdose. In preliminary testing, Conte’s team and MIT Sloan researchers found their model was highly accurate at predicting both overdoses and fatal overdoses, even when the data lagged by up to 180 days. Their analysis also showed that just 1 percent of the population analyzed accounted for nearly 70 percent of opioid harm and overdose events, suggesting that targeting that small fraction of individuals could greatly reduce these outcomes.
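The 1-percent, 70-percent finding is a concentration measure: rank people, take the top slice, and ask what share of all harm events falls inside it. Here is a minimal sketch of that arithmetic in Python, under stated assumptions. The helper `share_of_events_in_top_fraction` and the toy numbers are hypothetical and are not drawn from the Staten Island data or the MIT Sloan model. The ranking can use observed event counts, which measures how concentrated harm already is, or a model's risk scores, which estimates how much of that harm a targeted outreach list would cover.

```python
def share_of_events_in_top_fraction(scores, events, top_fraction=0.01):
    """Share of all events that fall among the top `top_fraction` of people.

    Hypothetical helper: `scores` ranks people (e.g. predicted risk or past
    event counts) and `events` holds each person's count of harm events.
    """
    if len(scores) != len(events):
        raise ValueError("scores and events must have the same length")
    total_events = sum(events)
    if total_events == 0:
        return 0.0
    # Order people from highest to lowest score.
    ranked = sorted(zip(scores, events), key=lambda pair: pair[0], reverse=True)
    # Size of the top slice, rounded to the nearest person but never zero.
    k = max(1, round(len(ranked) * top_fraction))
    events_in_top = sum(event for _, event in ranked[:k])
    return events_in_top / total_events


# Made-up population of 100 people: one person has 7 harm events,
# three people have 1 each, and everyone else has none.
events = [7, 1, 1, 1] + [0] * 96
share = share_of_events_in_top_fraction(events, events, top_fraction=0.01)
print(f"Top 1% accounts for {share:.0%} of events")  # prints "Top 1% accounts for 70% of events"
```

Passing the same list as both `scores` and `events` measures concentration directly; swapping in a model's predicted risk for `scores` shows how much of that harm a list of the top-ranked people would actually reach.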

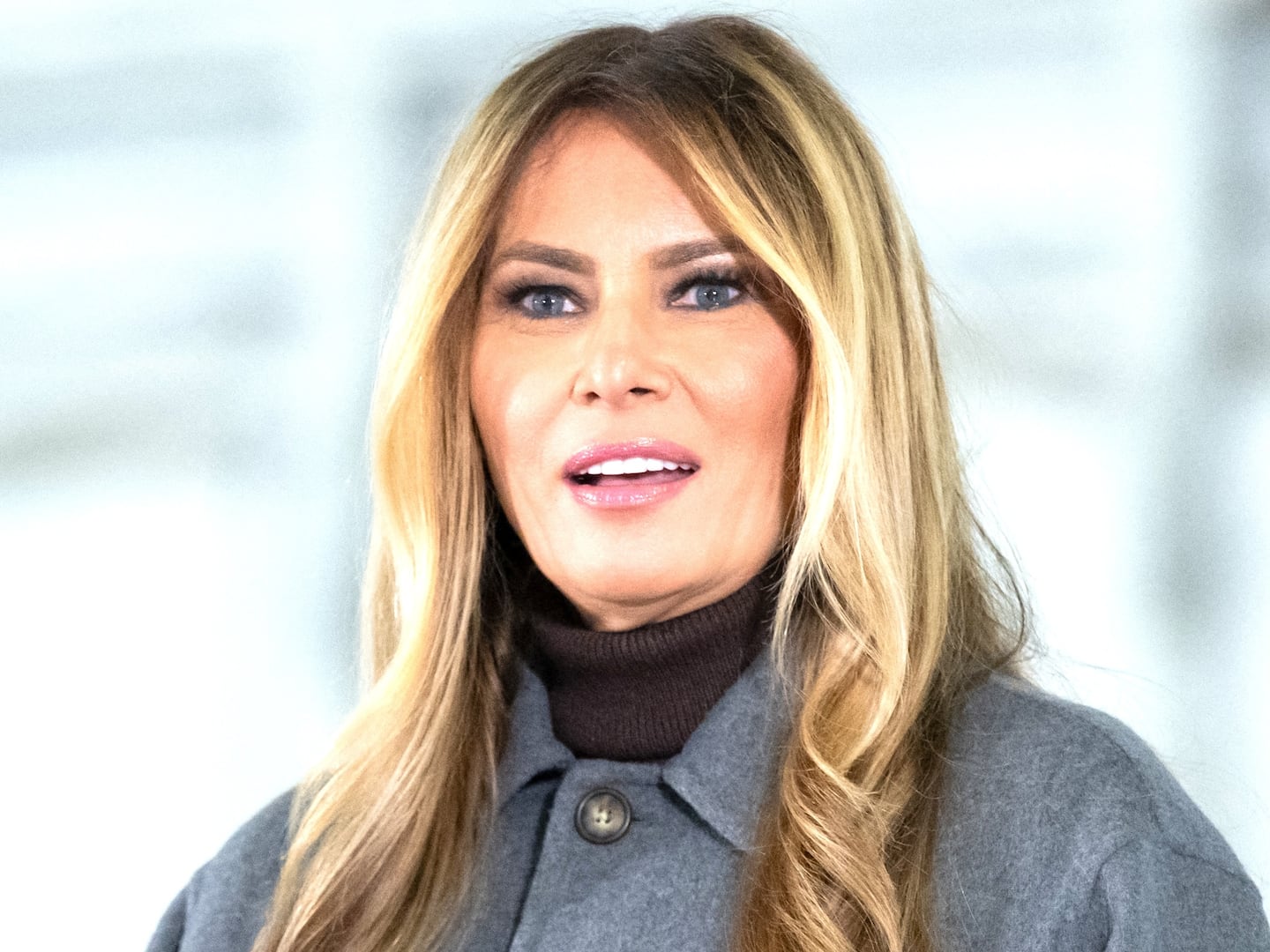

To Max Rose, a former U.S. Representative for New York and now a senior adviser to the Secure Future Project, which funded “Hotspotting the Opioid Crisis,” these results demonstrate that the project can and should be a national model.

“I don't think that there's any community that wouldn't benefit from the utilization of predictive analytics, so long as the data is not just created to be admired,” Rose told The Daily Beast. “This really needs to be scaled so that we can finally address this problem that's killing over 100,000 people a year.”

But these promising results could be blunted by one of AI’s most notorious weaknesses: a tendency to exacerbate disparities.

There’s already a nasty precedent in health care for risk scoring: prescription drug monitoring programs, or PDMPs, which are predictive surveillance platforms funded by law enforcement agencies. Algorithms built into these platforms score patients’ risk of prescription drug misuse or overdose, and those scores then influence how doctors treat them. But research suggests that PDMPs spit out inflated scores for women, racial minorities, uninsured people, and people with complex conditions.

“[PDMPs] are very controversial, because they didn't really do a good job on the validation,” Wei-Hsuan ‘Jenny’ Lo-Ciganic, a pharmaceutical health services researcher at the University of Florida who works on creating and validating AI models to predict overdose risk, told The Daily Beast.

Lo-Ciganic’s research aims to make sure that predictive algorithms for opioid overdoses don’t fall into the same trap. Her recent papers have focused on validating and refining an algorithm to confirm that a single model can be applied across multiple states and that an individual’s predicted risk does not vary greatly over time.

Validation is critical for any tool that affects patient care; without it, inaccurate predictions can jeopardize an entire program, said Jim Samuel, an information and artificial intelligence scientist at Rutgers University.

“Even if you misclassify just one person, I think that would be a huge violation of that person's rights,” Samuel told The Daily Beast. Privacy is a concern, too: much of the data used to train these algorithms qualifies as protected health information, which is governed by the HIPAA Privacy Rule. The rule gives Americans legal safeguards for their health data, including limits on how that information can be used and disclosed.

On top of these protections, those in charge of programming predictive algorithms need to ensure that an individual’s data is truly anonymized, said Samuel. “We don't want to get into a situation where we are tracking and monitoring people and invading their privacy. At the same time, we want to find that right balance whereby we are able to help the neediest segments.”

Patients should also have the option of removing their data from an algorithm’s training set, Samuel said, even if that means retraining the model, which can take several days.

Bias also poses a major problem for predictive algorithms generally, and these AI models are no exception. Algorithms can adopt the same prejudiced and unjust behaviors that plague humans if their training data leave out certain segments of the population. Furthermore, predictions from an algorithm built on health-care data are only useful for people who have records in the health-care system, and nonwhite people are more likely than white people to lack health insurance, a key barrier to seeking care.
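One common way to check for these skews is a subgroup audit: count how many people from each group appear in the data, then compare each group's average predicted risk with the rate of overdoses actually observed. A group whose scores run well above its observed rate is being over-flagged, the same pattern researchers have reported for PDMP scores. Below is a minimal Python sketch with made-up records; the function `calibration_by_group`, the group labels, and the numbers are hypothetical and do not come from any of the models in this story.

```python
from collections import defaultdict


def calibration_by_group(records):
    """Summarize predicted versus observed risk for each group.

    `records` is an iterable of (group, predicted_risk, had_event) tuples.
    Returns {group: (count, mean_predicted_risk, observed_event_rate)}.
    """
    # Per group: [number of people, sum of predicted risk, number of events]
    totals = defaultdict(lambda: [0, 0.0, 0])
    for group, predicted_risk, had_event in records:
        t = totals[group]
        t[0] += 1
        t[1] += predicted_risk
        t[2] += int(had_event)
    return {
        group: (count, risk_sum / count, event_count / count)
        for group, (count, risk_sum, event_count) in totals.items()
    }


# Made-up data: both groups have the same observed rate (1 in 4),
# but the model assigns group "B" twice the average predicted risk.
records = (
    [("A", 0.25, True)] + [("A", 0.25, False)] * 3
    + [("B", 0.5, True)] + [("B", 0.5, False)] * 3
)
for group, (count, predicted, observed) in sorted(calibration_by_group(records).items()):
    # Ratio above 1.0 means the group's scores exceed its observed rate.
    ratio = predicted / observed if observed else float("inf")
    print(f"{group}: n={count}, predicted={predicted:.2f}, "
          f"observed={observed:.2f}, ratio={ratio:.1f}")
```

In this toy data, group B comes out with a ratio of 2.0, meaning its scores run at twice its observed rate. A real audit would also need enough people per group for the rates to mean anything, and it cannot see people who never appear in health-care records at all.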

There’s also the secret sauce of the algorithm itself: the variables a programmer chooses to feed the model never encompass all of the data it could be analyzing. And that picking and choosing can present big problems if we’re not careful.

“Health care is data-rich,” Conte said. “The great part about it is this enormous amount of information. The difficult part about it is you have to put your arms around it, so that you take all variables into consideration. Because if you don’t, that’s where the bias comes in.”

He added that including homelessness and housing insecurity as variables proved key to improving the accuracy of the algorithm’s predictions. That suggests AI models trained on different data sources, or ones that exclude those variables, may inadvertently introduce bias.

But inadvertent is not synonymous with harmless. “It’s not the machine’s fault” but rather the programmers’ when an algorithm is trained on biased data, Lo-Ciganic said. “I think it’s our responsibility before implementation to try to minimize the biases.”