Your iPhone may be making more decisions about what you use than you might expect.

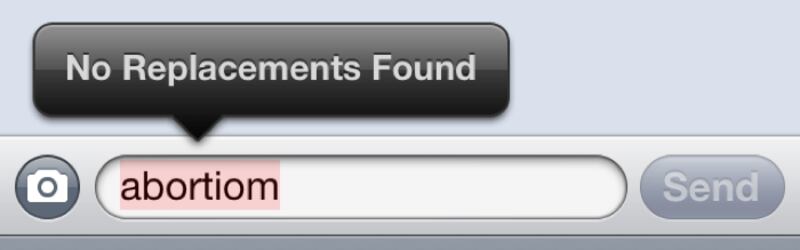

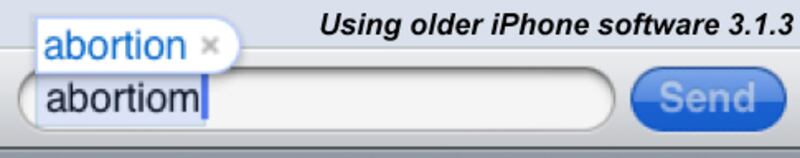

According to a Daily Beast analysis, iPhones running Apple’s latest software will not suggest corrections for even slight misspellings of such hot-button words as “abortion,” “rape,” “ammo,” and “bullet.” For example, if a user types “abortiom” with an “m” instead of an “n,” the software won’t suggest a correction, as it would with nearly 150,000 other words. (Many modern spell-check and predictive text engines understand that the “n” is located next to “m” on a standard keyboard, so replacing it with its neighbor is the low-hanging fruit of the correction world.)
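To make the neighboring-key idea concrete, here is a minimal sketch of how one-letter slips of that kind could be generated. It is an illustration only, not Apple's algorithm or the code used in this analysis: the names `QWERTY_ROWS`, `row_neighbors`, and `neighbor_misspellings` are hypothetical, and it assumes a standard QWERTY layout and considers only keys directly to the left or right on the same row.

```python
# Illustrative sketch only: assumes a standard QWERTY layout and looks
# only at the keys immediately left and right on the same row.
QWERTY_ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]


def row_neighbors(ch):
    """Return the keys directly left and right of `ch` on its keyboard row."""
    for row in QWERTY_ROWS:
        i = row.find(ch)
        if i != -1:
            return [row[j] for j in (i - 1, i + 1) if 0 <= j < len(row)]
    return []  # not a letter key in this simplified layout


def neighbor_misspellings(word):
    """Return every variant of `word` with exactly one letter replaced
    by a same-row neighbor, sorted alphabetically."""
    lower = word.lower()
    variants = set()
    for i, ch in enumerate(lower):
        for nb in row_neighbors(ch):
            variants.add(lower[:i] + nb + lower[i + 1:])
    return sorted(variants)


if __name__ == "__main__":
    print("abortiom" in neighbor_misspellings("abortion"))
```

A correction engine can run the same map in reverse: given “abortiom,” it swaps each letter for its neighbors and checks whether any result is a dictionary word.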

Provocative words aren’t the only ones iPhone software won’t correct. Our analysis found over 14,000 words that are recognized as words when spelled accurately, but that won’t be corrected even when they are only slightly misspelled. However, the vast majority of these words are technical or very rarely used words: “nephrotoxin,” “sempstress,” “sheepshank,” or “Aesopian,” to name a few.

But among this list as well are more frequently used (and sensitive) words such as “abortion,” “abort,” “rape,” “bullet,” “ammo,” “drunken,” “drunkard,” “abduct,” “arouse,” “Aryan,” “murder,” and “virginity.”

We often look to technology to make our lives easier—to suggest restaurants, say, or to improve things as simple as our typing. But as more and more of our speech passes through mobile devices, how often is software coming between us and the words we want to use? Or rather, when does our software quietly choose not to help us? And who draws the line?

An Apple spokesperson declined to comment for this article.

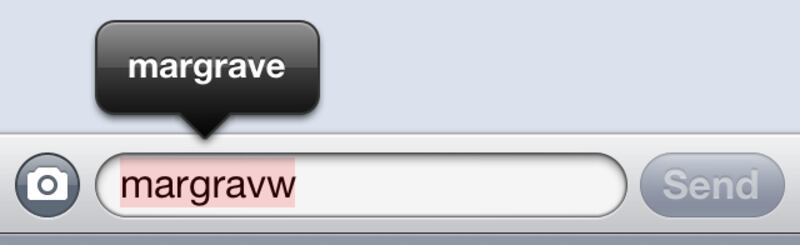

Here’s how their software works: when you misspell a word on an iOS device—iPhones, iPads, etc.—it will be underlined in red. Double-tap the garbled word, and a menu will appear in which you can pick from a few suggestions; hopefully the word you intended to write will be there. This fix works for the vast majority of words—but a few, like those mentioned above, won’t have any suggestions at all, even if you were mistaken by only one character.

To find the list of excluded words, we came up with two different misspellings for each of roughly 250,000 words, including all of the ones in the internal dictionary that ships with Apple’s desktop operating system, and wrote an iOS program that would input each misspelled variant into an iOS simulator (a computer program that mimics the behavior of a factory-condition iPhone). We then made a separate program that simulated a user selecting from the menu of suggested corrections and recorded the results. After narrowing down the list to roughly 20,000 words that looked problematic, we tested 12 more misspellings of each. Words that did not offer an accurate correction in any of the 14 tests were added to our list of banned words.
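The two-stage filter described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the programs used for the analysis: `never_corrected` and `two_pass_filter` are hypothetical names, the `suggest` function stands in for whatever returns the suggestion menu in the iOS simulator, and the misspelling generators and lookup table at the bottom are toy placeholders.

```python
from typing import Callable, Iterable, List

# Hypothetical type aliases for this sketch.
Suggest = Callable[[str], List[str]]       # misspelling -> suggested corrections
Misspell = Callable[[str], Iterable[str]]  # word -> misspelled variants


def never_corrected(word: str, variants: Iterable[str], suggest: Suggest) -> bool:
    """True if none of the variants brings back `word` as a suggestion."""
    return all(word not in suggest(v) for v in variants)


def two_pass_filter(words: Iterable[str], first_pass: Misspell,
                    second_pass: Misspell, suggest: Suggest) -> List[str]:
    """Keep words that fail a small first round of misspellings and then
    also fail a larger second round."""
    suspects = [w for w in words if never_corrected(w, first_pass(w), suggest)]
    return [w for w in suspects if never_corrected(w, second_pass(w), suggest)]


# Toy stand-ins (assumptions for illustration, not real test data).
def drop_last(word: str) -> List[str]:
    return [word[:-1]]            # e.g. "table" -> "tabl"


def double_first(word: str) -> List[str]:
    return [word[0] + word]       # e.g. "table" -> "ttable"


TOY_MENU = {"tabl": ["table", "tab"], "ttable": ["table"]}


def toy_suggest(misspelling: str) -> List[str]:
    return TOY_MENU.get(misspelling, [])  # empty menu when nothing is offered


if __name__ == "__main__":
    print(two_pass_filter(["table", "murder"], drop_last, double_first, toy_suggest))
```

Running the cheap first pass over the full word list and the heavier second pass only over the suspects mirrors the narrowing from roughly 250,000 words to roughly 20,000 before the additional 12 tests.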

For a more detailed analysis of our methodology, read our blog post on NewsBeast Labs.

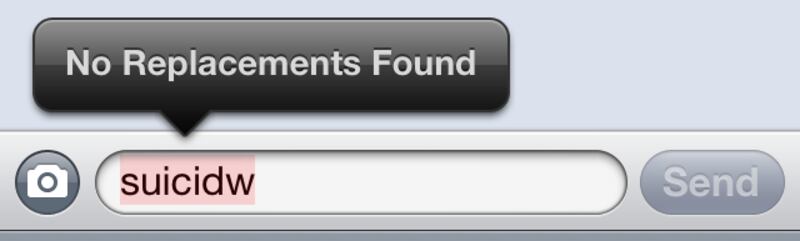

In total, our analysis found dozens of words that were not identified as jargon or technical words but nonetheless did not offer corrections—charged words like “bigot,” “cuckold,” “deflower,” “homoerotic,” “marijuana,” “pornography,” “prostitute,” and “suicide.”

You can try these words on your iPhone, but keep in mind that spell-check software can learn from usage. It’s unclear if iOS software will never learn these words or if the software will adapt if you use these words frequently. The iPhones we used for testing were all restored to factory settings and therefore had no usage history.

Note that the software in question is slightly different from autocorrect. With autocorrect, a user types a word, and halfway through the software will guess at what it thinks the person means. Our analysis does not cover the autocorrect feature. In fact, previous iOS software, before spell check was introduced in April 2010, autocorrected many of the words the latest software won’t. “Abortion,” “rape,” “drunken,” “arouse,” “murder,” “virginity,” and others were accurately autocompleted under iOS 3.1.3.

Currently all new iOS devices ship with iOS 6, which includes spell check. Anyone who has upgraded their iOS since fall 2012 will have the latest iOS 6 software.

“I hate to say it, but I don’t think this should surprise anyone,” says Jillian York, the director for international freedom of expression at the Electronic Frontier Foundation. “Apple is one of the most censorious companies out there,” she explained, and cited the company’s history of censoring products in its App Store and its lack of participation in the Global Network Initiative, a nonprofit partnership between Google, Yahoo, Microsoft, and a number of human-rights groups and other organizations advocating for free expression online.

Failing to correct controversial words “isn’t censorship outright,” she said, “but it is annoying, and it’s denying choice to customers.”

Of course, excluding certain words from autocomplete or spell-check suggestions isn’t uncommon—most smartphone users know that their device has an aversion to swears. The industry even has a term for it—the “kill list”—according to Aaron Sheedy, vice president of text input at Nuance Communications, the company that pioneered T9 and the new Swype keyboard technology used on many Android devices.

For devices shipped to mainland China, for instance, Sheedy explains, a “kill list” of words specific to that country will be loaded onto the device according to filter specifications from OEMs, or original equipment manufacturers. “Presumably they have a filter that comes from the government,” he said. Clearly government censorship is not at issue with phones shipped to the U.S., but the technology to limit some words exists in the industry.

One explanation for the existence of a “kill list” on iOS devices apparently is that phones have limited storage, and these words just didn’t make the cut because they’re not used frequently enough in everyday speech. But if that were the case, then why were they available in iOS 3.1.3? And why are words like “abiogenesis,” “abiotic,” “ableism,” “abomasum,” “abseil,” “accipiter,” “Akkadian,” “edutainment,” “effleurage,” “Egbert,” “elastase,” “electrodialysis,” “electrophysiology,” “ethiopic,” “filaria,” “foldout,” “furosemide,” “goldenseal,” “lycanthropy,” “lymphadenopathy,” “lymphocytic,” “Lysander,” “margrave,” “metallography,” “monocycle,” “monopole,” “moonwalk,” “nystatin,” “osteogenesis,” “Pandanus,” and “pigsticker” accurately suggested?

Another explanation is that “abortion” and similar words are sensitive subjects and thus best to avoid completely. Of course, one could also say that “surveillance” is a sensitive subject, too. Again, where’s the line between the two, and who draws it?

This isn’t the first time Apple products have seemed to treat words around abortion and sex differently than other categories of words. In December 2011, one blogger on the site Abortioneers discovered that Siri, the iPhone 4S’s personal assistant software, wouldn’t tell users where they could find an abortion clinic. In some cases in Washington, D.C., Siri directed them to pro-life clinics. The site’s readers reported that Siri couldn’t answer similar questions about abortion and birth control such as, “I am pregnant and do not want to be. Where can I go to get an abortion?” or “I need birth control. Where can I go for birth control?”

Apple’s CEO, Tim Cook, responded to the incident at the time, saying that the product was still in beta and that “these are not intentional omissions.”

“Our customers use Siri to find out all types of information, and while it can find a lot, it doesn’t always find what you want,” Cook said. “These are not intentional omissions meant to offend anyone. It simply means that as we bring Siri from beta to a final product, we find places where we can do better, and we will in the coming weeks.”

A year and a half later, Siri will direct you to a Google search for “Abortion clinics near [YOUR LOCATION]” if you ask for abortion services. When we asked it for birth control, it directed us toward a Planned Parenthood near us, though it was the Planned Parenthood corporate headquarters and not an actual clinic.

Apple also declined to comment on changes made to Siri regarding abortion and birth control.

Asked by The Daily Beast why Apple software won’t correct “abortiom” to “abortion,” Siri responded only: “I’m sorry, I don’t understand.”