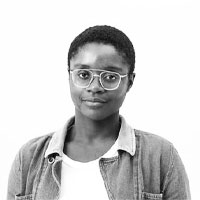

The movement against faulty artificial intelligence began with a discovery made by MIT Media Lab computer scientist and doctoral candidate Joy Buolamwini. Buolamwini, who went viral after testifying in front of the U.S. Congress in 2019 (which included a back and forth with Rep. Alexandria Ocasio-Cortez), accidentally stumbled on the racial and gender biases baked into mass-marketed AI software while completing an art project for a class at MIT. Only when Buolamwini, who is black, put on a white mask would the major facial recognition software she was using pick up on her face. She went on to test several other facial recognition systems and found that the issue was not a glitch, but standard. From there, the idea of algorithmic justice, or the ability to have recourse against algorithmic discrimination, sprang forth.

Coded Bias, a new documentary by director Shalini Kantayya, tracks the primarily woman-led movement against this strange new form of discrimination. Thankfully, both Buolamwini and Kantayya are able to see that the problem with AI isn’t about representation; in fact, being “recognized” by this software is usually worse than being ignored by it. That’s because everything from Facebook’s to Google’s to Amazon’s to the FBI’s and U.K. police’s software is liable to falsely ID people as undesirable consumers, delinquent tenants, unqualified job candidates, likely recidivists, or wanted criminals. Often, these facial recognition systems ID with a strong bias against black people of all genders, women of all races, and gender non-conforming people. It becomes obvious, then, that the software is made primarily by and for white men.

Coded Bias seems to land on the need for strong federal regulation of AI, especially facial recognition software. But it’s unclear whether the regulatory model would be sufficient, since it would need to be strongly enforced by an independent body that doesn’t stand to gain from letting shady products slide. In the documentary, the Food and Drug Administration is brought up as a desirable analogue, but the FDA has famously let harmful drugs and food items pass through (like the diabetes drug troglitazone, which regulators in the U.K. took off the market in 1997, but which the FDA only removed in 2000) while delaying the approval of crucial, life-saving interventions, like HIV/AIDS medication.

Coded Bias also follows Buolamwini as she meets with a tenants’ organizing group in Brownsville, Brooklyn, made up mostly of black women who are fighting their landlords’ move to put in a facial recognition building access system alongside the key-fob system that is already in place. In this building, the primarily black and brown tenants are already being tracked by surveillance systems once they’re inside, and cited for any behaviors that building management doesn’t like. This Brownsville Big Brother situation illustrates how the most liberty-denying technologies come to the most marginalized communities first, where poor black people serve as guinea pigs (you could even say regulatory bodies, literally) for new technology. The tenants fight back, with both elders and young residents coming together with Buolamwini to challenge incursions on their privacy and freedom.

But, as the documentary makes clear, even with small-scale community interventions like these, the U.S. is no better off than China, which famously engages in almost ubiquitous surveillance of its citizens with the “social credit” system that scores (and thus rewards or punishes) citizens based on their public actions. In fact, one researcher points out, the only difference between China and the U.S. on surveillance is that China is actually transparent about its scoring system. In the U.S., we also give citizens and residents “social credit” scores; it’s just that those scores are typically hidden from us. If you have trouble getting a mortgage or car insurance, that may be because of how various private companies and even the government have tracked and scored you. If you can’t manage to get interviews for jobs that you’re clearly qualified for, it may be because human resources departments are using biased AI to screen résumés (when Amazon tested such a tool, it penalized women’s résumés in favor of men’s). If you’re on a watchlist even though you’ve never been convicted of any crime or engaged in any violent behaviors, that may be because some algorithm or tracking system gave you a score.

It’s these revelations that make it curious that Coded Bias lands on our very imperfect judicial system as the recourse for surveillance capitalism. Harvard Business School professor Shoshana Zuboff, who wrote the very robust The Age of Surveillance Capitalism and originated the phrase itself, is not mentioned or interviewed in the documentary. But her idea of the “behavioral futures market” rigorously demonstrates the way in which predictive policing, and thus the expansion of police powers even under police reform frameworks, is guaranteed by artificial intelligence, machine learning, and the big data that feeds into them, which are all in turn bolstered by capitalism itself. Coded Bias might have benefited from infusing Buolamwini’s perspective on algorithmic justice with Zuboff’s on surveillance capitalism.

Coded Bias is hyper-focused on Buolamwini as a leader and evangelist in the algorithmic justice space, and as a result, does not question an approach that is rooted in improving company conduct rather than challenging the very foundation of the Googles and IBMs of the world (and especially, of the U.S.). Buolamwini does receive backlash from Amazon when a VP incompetently seeks to discredit her work in response to a critique she levels against the company, but IBM, on the other hand, has absorbed some of her critiques and repackaged them into diversity and inclusion efforts. Is absorption by, and partnership with, corporate technology companies the point? We never know, because director Shalini Kantayya never asks Buolamwini directly about it.

Kantayya might also have given more space to the ideas touched on by some of the other experts in the film, including mathematician Cathy O’Neil, data journalist Meredith Broussard, and Information Studies and African-American Studies scholar Dr. Safiya Umoja Noble. Instead, Coded Bias seems fairly trained on shaping Buolamwini as a movement leader, flanked by other brilliant women. It’s this branding that obscures the core of Buolamwini’s and many others’ mission: to keep corporations from deciding our futures.