It’s been a whirlwind few months in the world of large language models (LLMs), better known to most people as chatbots. Since the release of ChatGPT by OpenAI in Nov. 2022, we’ve seen billions upon billions of dollars being poured into the development and implementation of generative AIs such as Google’s Bard and Microsoft’s Bing chatbots—and it’s easy to see why.

Chatbots like ChatGPT or image generators like DALL-E and Midjourney can feel like magic. With the right prompts, you can get them to do things you wouldn’t have imagined a few years ago, like crafting late-night-monologue-ready jokes and creating award-winning pieces of “art.” It’s no surprise that since the public launch of ChatGPT, tech companies have been working to cash in on this modern-day gold rush.

Like the gold rush, the AI boom is fraught with risks, both financial and social, that haven’t been fully thought through yet. There’s a serious concern that the influx of new investment and development into these LLMs is blowing up a sort of generative AI bubble, the likes of which we haven’t seen since *checks notes* the metaverse and cryptocurrency.

Okay, maybe we should have seen this one coming.

“There will be hundreds, probably thousands of companies luring in the gullible with fake-it-til-you-make-it promises,” Gary N. Smith, author of several books on AI including Distrust: Big Data, Data-Torturing, and the Assault on Science, told The Daily Beast. “The popping of the LLM bubble probably won’t stop future bubbles any more than the dotcom, cryptocurrency, and AI bubbles did.”

There is an undeniable feeling of déjà vu when it comes to the current chatbot trend. Less than two years ago, Facebook announced with much fanfare that it was pivoting its entire business model to focus on becoming “a metaverse company.” All of a sudden, other companies began making moves towards the metaverse too. Major brands like Samsung, Adidas, and Atari began investing and holding auctions in metaverse platforms like Decentraland. Disney opened up a metaverse division at the behest of then-CEO Bob Chapek.

A year later, though, it all blew up in their faces like so many broken VR headsets. Decentraland remains an embarrassingly empty and unused platform. Meta invested billions of dollars into a pivot that has been slow to gain popularity (or really any popularity at all), and demand seems non-existent for its Horizon Worlds platform. Disney axed its metaverse division this past month with little to nothing to show for it.

And before that there were blockchain and cryptocurrencies. Companies and entrepreneurs of all stripes were desperately trying to find ways to cash in on the crypto boom by selling NFT collections or minting crypto, regardless of whether it actually fit into their primary business. Hell, McDonald’s even released a McRib NFT. Hard cut to today, and crypto and NFTs have become punchlines for poor investments.

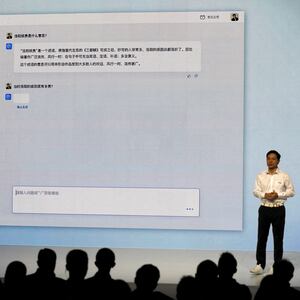

Now every single one of those trends has not just taken a backseat to AI; they’ve all practically been kicked out of the car and left to rot on the road. Companies like Snapchat, Instacart, Coca-Cola, and Shopify have all begun partnering with OpenAI in order to incorporate its generative AI technology into their processes. On top of all of that, Microsoft’s launch of Bing’s chatbot has acted as a cattle prod for other Big Tech giants like Google, Meta, and China’s Baidu to release their own proprietary chatbots.

“Companies will see opportunities in these systems, but won't necessarily understand them well enough to make good use of them,” Vincent Conitzer, an AI ethics researcher at Carnegie Mellon University, told The Daily Beast in an email. “It's all too easy to be convinced by a good demo that shows ChatGPT giving a good answer—one that, if a human being gave it, would convince you that that person really knew her stuff.”

Smith echoed the sentiment, saying that these companies are “overestimating the potential benefits from unreliable LLMs and not paying enough attention to the financial, legal, and reputational risks of relying on LLMs when mistakes have serious consequences.”

These consequences can range from the trivial (the chatbot gets the price of a product wrong, or mixes up its dates when reciting a historical fact) to the downright dangerous. We’ve seen time and again the issues that AI has when it comes to biased behavior. Since ChatGPT’s release alone, we’ve seen OpenAI’s LLM fabricate news and scientific articles, and even falsely accuse a law professor of sexual harassment.

It can be easy to look at these issues and brush them off as simple mistakes made by a chatbot, but they could have real-world consequences in a day and age when misinformation runs rampant through social media. People are easily swayed, even if they do know it’s a robot they’re talking to.

A paper published in Scientific Reports on April 6 found that ChatGPT was even capable of influencing people’s moral decision-making. The study posed the Trolley Problem, the famed thought experiment and internet meme that asks whether or not you’d sacrifice the life of one person to save five people, to 767 American participants. Before answering, the participants were given a statement written by ChatGPT arguing either for or against sacrificing the person.

In the end, the researchers found that the participants were much more likely to make the same choice as the statement they had just read, even after they were informed that it was generated by ChatGPT. That means when it comes to actual matters of life and death, we might still be very susceptible to the influence of a chatbot.

“Technological change has always come with challenges,” Sebastian Krügel, a senior researcher of AI ethics at the Technical University Ingolstadt of Applied Sciences and lead author of the study, told The Daily Beast in an email. “As a society, we need to remain vigilant and take a critical look at new technology while acknowledging their potential.”

Despite the findings, Krügel and his colleagues remain optimistic. After all, they don’t really have much of a choice. You can’t put the genie back in the bottle. Despite OpenAI’s CEO Sam Altman saying that it’d be a “mistake to be relying on it for anything important right now,” ChatGPT and other generative AI like DALL-E are already out there and people are embracing them, whether we like it or not.

Much like the potential applications of these AIs, there are tons of ways we can attempt to mitigate the dangers. Krügel and his co-authors recommend designing AI systems more intentionally so that they do as little harm as possible, specifically by building chatbots that don’t answer big, sticky moral questions. “We won’t stop technological progress anyway,” Krügel said, “but we should try to shape technological progress as much as possible in our own interests to turn it into moral progress.”

Making sure that society is educated when it comes to digital and media literacy is also key. We need to understand what exactly these AIs can and cannot do. A large part of the reason people can be so easily swayed by them is that they speak so clearly, and with an air of authority. In reality, though, they’re just chatbots that have been trained to predict the next word in a sequence of text.

That’s difficult to do, of course, when ChatGPT and Microsoft’s Bing chatbot seemed to be released to the masses with few guardrails for what they would say or do. As a result, we saw instances where they produced menacing or dangerous-sounding text, like when Bing’s chatbot created a persona dubbed “Venom.” The Bing chatbot even made people believe that it had fallen in love with them, like when a New York Times reporter chatted with the bot. Only after an entire news cycle of headlines implying it was sentient or capable of human thoughts and emotions did OpenAI and Microsoft take steps to “lobotomize” the chatbot, as some users put it. Still, the damage was done.

That’s the main reason a group of tech professionals, experts, and academics recently signed a letter calling for a pause on the development of “giant AI experiments.” Signatories included the likes of Elon Musk, Steve Wozniak, and Andrew Yang. “Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable,” the letter said.

“It’s good to all take a breather and not rush everything out too much,” Conitzer, who was one of the signatories, explained. “In many cases, companies will be better served by thinking more carefully about what they really need, designing something more targeted specifically for that, and carefully evaluating and testing the system.”

Right now, though, it doesn’t seem like companies are too keen on doing that. Instead, we’re back to those early days of social media when “move fast and break things” was the ethos that propelled social media platforms to the stratosphere. Today, everyone’s rushing once again to see who can be the new Facebook or Instagram once the dust settles from the AI boom, without worrying about who or what they’re breaking along the way.