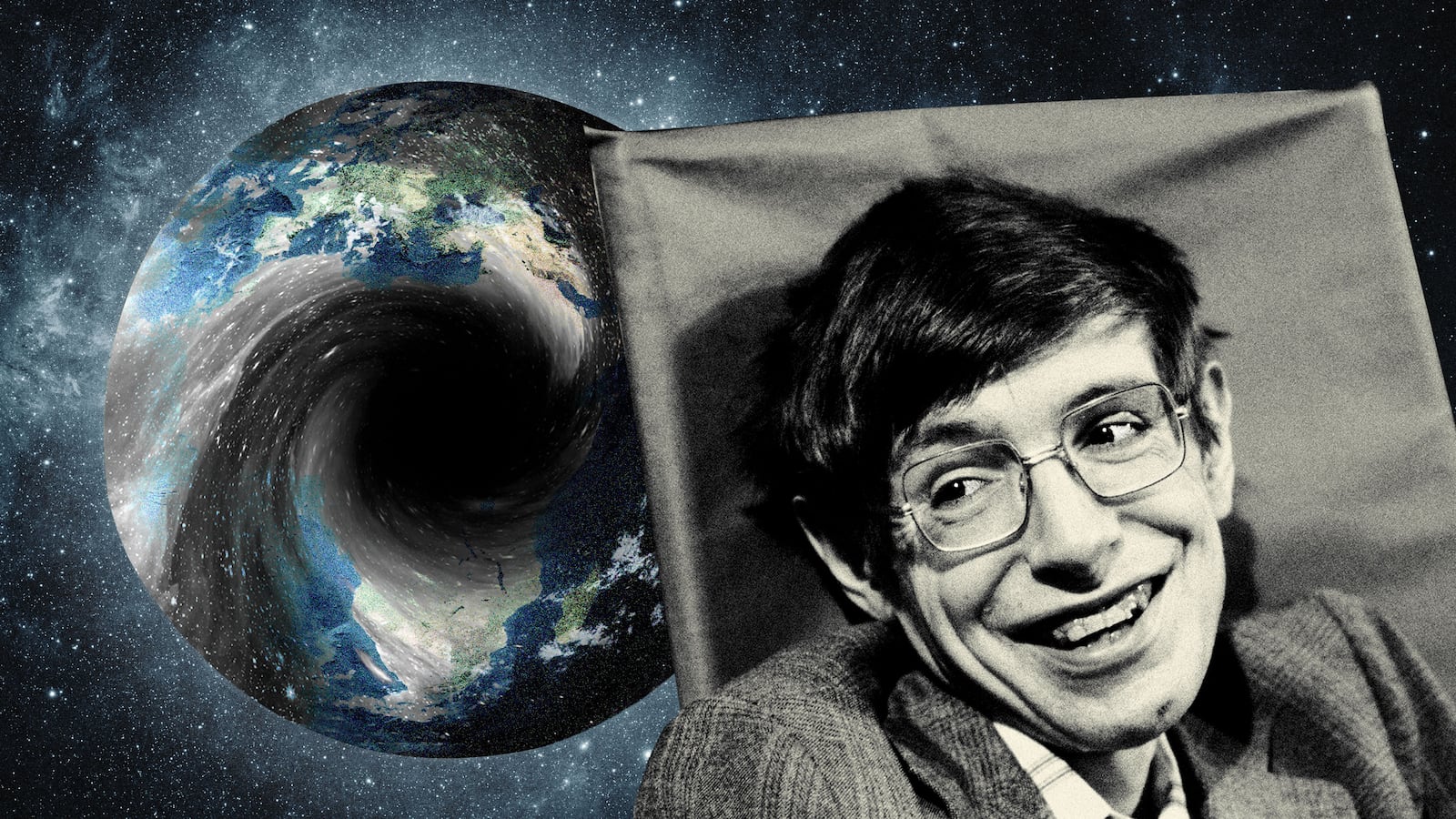

“I hope you’ll make black holes,” Stephen said with a broad smile. We exited the cargo lift that had taken us underground into the five-story cavern housing the ATLAS experiment at the CERN lab, the legendary European Organization for Nuclear Research near Geneva. CERN’s director general, Rolf Heuer, shuffled his feet uneasily. This was 2009, and someone had filed a lawsuit in the United States, concerned that CERN’s newly constructed Large Hadron Collider, the LHC, would produce black holes or another form of exotic matter that could destroy Earth.

The LHC is a ring-shaped particle accelerator that was built, principally, to create Higgs bosons, at the time the missing link in the Standard Model of particle physics. Housed in a tunnel underneath the Swiss-French border, the ring has a total circumference of twenty-seven kilometers (almost seventeen miles), and it accelerates two counter-rotating beams of protons, each in its own circular vacuum tube, to 99.9999991 percent of the speed of light. At four locations along the ring, the two beams can be brought into highly energetic collisions, recreating conditions comparable to those that prevailed in the universe a small fraction of a second after the hot big bang, when the temperature was more than a million billion degrees. The tracks of the spray of particles created in these violent head-on collisions are picked up by millions of sensors stacked like mini–Lego blocks to make up giant detectors, including the ATLAS detector and the Compact Muon Solenoid, or CMS.
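A quick check on the quoted speed: for a proton moving at a fraction β of the speed of light, the Lorentz factor γ = 1/√(1 − β²) fixes the total energy through E = γ m_p c². The sketch below assumes the CODATA proton rest energy of about 0.938 GeV and computes (1 − β)(1 + β) instead of 1 − β² to limit floating-point cancellation so close to β = 1. It is an illustration only, not a description of how CERN specifies its beams.

```python
import math

PROTON_REST_ENERGY_GEV = 0.93827208816  # m_p c^2 (CODATA 2018), GeV

def lorentz_factor(beta: float) -> float:
    """Return gamma = 1/sqrt(1 - beta^2) for a speed given as a fraction of c."""
    # (1 - beta)(1 + beta) equals 1 - beta^2 but loses less precision near beta = 1
    return 1.0 / math.sqrt((1.0 - beta) * (1.0 + beta))

def proton_energy_gev(beta: float) -> float:
    """Total energy E = gamma * m_p c^2 of a proton moving at beta * c, in GeV."""
    return lorentz_factor(beta) * PROTON_REST_ENERGY_GEV

beta = 0.999999991  # 99.9999991 percent of the speed of light
gamma = lorentz_factor(beta)
energy_tev = proton_energy_gev(beta) / 1000.0
print(f"gamma ~ {gamma:.0f}, E ~ {energy_tev:.2f} TeV per proton")
```

The result, a Lorentz factor of roughly 7,450 and an energy of about 7 TeV per proton, matches the LHC's design beam energy.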

The lawsuit was soon to be dismissed on the grounds that “speculative fear of future harm does not constitute an injury in fact sufficient to confer standing.” In November of that year the LHC was successfully turned on—after an explosion at an earlier attempt—and the ATLAS and CMS detectors soon found traces of Higgs bosons in the debris of the particle collisions. But, so far, the LHC hasn’t made black holes.

Why wasn’t it entirely unreasonable, though, for Stephen (and Heuer too, I think) to hope that it might be possible to produce black holes at the LHC? We usually think of black holes as the collapsed remnants of massive stars. This is too limited a view, however, for anything can become a black hole if squeezed into a sufficiently small volume. Even a single pair of protons accelerated to nearly the speed of light and smashed together in a powerful particle accelerator would form a black hole if the collision concentrated enough energy into a small enough volume. It would be a tiny black hole, for sure, with a fleeting existence, for it would instantly evaporate through the emission of Hawking radiation.
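To see how far a real collision is from this regime, one can compare two lengths for the same energy E: the Schwarzschild radius r_s = 2GE/c⁴ of a black hole with mass E/c², and the distance ħc/E that a collision of that energy can roughly resolve. The sketch assumes a collision energy of 13.6 TeV, the LHC's Run 3 value, and treats both formulas as order-of-magnitude estimates in ordinary four-dimensional gravity.

```python
# Physical constants in SI units (CODATA 2018 values)
G = 6.67430e-11            # Newton's constant, m^3 kg^-1 s^-2
C = 299_792_458.0          # speed of light, m/s
HBAR = 1.054571817e-34     # reduced Planck constant, J s
EV = 1.602176634e-19       # one electronvolt in joules

def schwarzschild_radius(energy_j: float) -> float:
    """Horizon radius 2GM/c^2 for a mass M = E/c^2, i.e. 2GE/c^4, in metres."""
    return 2.0 * G * energy_j / C**4

def probed_length(energy_j: float) -> float:
    """Rough resolving distance hbar*c/E of a collision with energy E, in metres."""
    return HBAR * C / energy_j

collision_energy = 13.6e12 * EV  # assumed 13.6 TeV collision energy, in joules
r_s = schwarzschild_radius(collision_energy)
length = probed_length(collision_energy)
print(f"r_s ~ {r_s:.1e} m, probed length ~ {length:.1e} m, ratio ~ {length / r_s:.0e}")
```

On these estimates the would-be horizon is some twenty-nine orders of magnitude smaller than the region over which the collision energy is spread.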

At the same time, if Stephen and Heuer’s hope to produce black holes had come true, it would have signaled the end of particle physicists’ decades-old quest to explore nature at ever shorter distances by colliding particles with ever increasing energies. Particle colliders are like microscopes, but gravity appears to set a fundamental limit to their resolution, because it triggers the formation of a black hole whenever we push the energy too high in trying to peek into an ever smaller volume. At that point, adding even more energy would produce a bigger black hole instead of further increasing the collider’s magnifying power. Curiously, therefore, gravity and black holes completely reverse the usual thinking in physics that higher energies probe shorter distances. The endpoint of the construction of ever larger accelerators doesn’t appear to be a smallest fundamental building block, the ultimate dream of every reductionist, but an emergent macroscopic curved spacetime. Looping short distances back to long distances, gravity makes a mockery of the deeply entrenched idea that the architecture of physical reality is a neat system of nested scales that we can peel off one by one to arrive at a fundamental smallest constituent. Gravity, and therefore spacetime itself, seems to possess an anti-reductionist element, a difficult-to-grasp but important idea.

So at what microscopic scale does particle physics without gravity transmute into particle physics with gravity? (Or, put differently, how much would it cost to fulfill Stephen’s dream of producing black holes?) This question has to do with the unification of all forces, the topic of this chapter. The search for a unified framework that encompasses all basic laws of nature was already Einstein’s dream. The question also bears directly on whether multiverse cosmology really has the potential to offer an alternative perspective on our universe’s life-encouraging design. For only an understanding of how all particles and forces fit harmoniously together can yield further insight into the uniqueness, or lack thereof, of the fundamental physical laws, and hence into the level at which one can expect them to vary across the multiverse.
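One rough way to locate that scale is to ask when two lengths become equal: the distance ħc/E that a collision of energy E resolves, and the Schwarzschild radius 2GE/c⁴ of that energy. Setting them equal gives E = √(ħc⁵/2G), which differs from the conventional Planck energy E_P = √(ħc⁵/G) only by a factor of √2. The sketch below computes both and compares E_P with an assumed LHC collision energy of 13.6 TeV; factors of order one depend on conventions and should not be taken literally.

```python
import math

G = 6.67430e-11            # Newton's constant, m^3 kg^-1 s^-2
C = 299_792_458.0          # speed of light, m/s
HBAR = 1.054571817e-34     # reduced Planck constant, J s
GEV = 1.602176634e-10      # one GeV in joules

def crossover_energy_j() -> float:
    """Energy at which hbar*c/E equals 2GE/c^4, i.e. sqrt(hbar c^5 / 2G), in joules."""
    return math.sqrt(HBAR * C**5 / (2.0 * G))

def planck_energy_j() -> float:
    """Conventional Planck energy sqrt(hbar c^5 / G), in joules."""
    return math.sqrt(HBAR * C**5 / G)

planck_gev = planck_energy_j() / GEV
crossover_gev = crossover_energy_j() / GEV
lhc_gev = 13.6e3  # assumed LHC collision energy of 13.6 TeV, in GeV
print(f"Planck energy ~ {planck_gev:.2e} GeV (crossover ~ {crossover_gev:.2e} GeV)")
print(f"Planck energy / LHC energy ~ {planck_gev / lhc_gev:.1e}")
```

On this crude estimate, a collider would need nearly a million billion times the LHC's collision energy before gravity took over.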

Most visible matter is made of atoms that consist of electrons and a tiny nucleus, which itself is a conglomerate of protons and neutrons. Atomic nuclei are held together by the strong nuclear force that acts on quarks, the constituent particles of protons and neutrons. The strong force is strong, but it has an extremely short range, dropping sharply to zero beyond distances of about a ten-trillionth of a centimeter. The second nuclear force, the weak force, acts both on quarks and on a second class of matter particles, known collectively as leptons, that includes electrons and neutrinos. The weak force is responsible for the transmutation of some nuclear particles into others. For example, an isolated neutron is unstable and decays, on average after about a quarter of an hour, into a proton and two leptons (an electron and an antineutrino) in a process mediated by the weak nuclear force. The third and final particle force, the electromagnetic force, is the most familiar. Unlike the strong and weak nuclear forces, electromagnetism, like gravity, has a very long range. It operates not only on atomic and molecular scales, binding electrons to atomic nuclei and atoms in molecules, but also across macroscopic distances. So, not surprisingly, electromagnetism, together with gravity, is responsible for most everyday phenomena and applications, from communication devices and MRI scanners to rainbows and the northern lights.
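The lifetime of a free neutron can be made concrete with the exponential decay law N(t) = N₀ e^(−t/τ). The sketch below assumes a mean lifetime τ of about 879 seconds, close to measured values, and derives the half-life τ ln 2 along with the fraction of neutrons still intact after a few chosen times.

```python
import math

NEUTRON_MEAN_LIFETIME_S = 879.0  # assumed mean lifetime of a free neutron, seconds

def surviving_fraction(t_seconds: float, tau: float = NEUTRON_MEAN_LIFETIME_S) -> float:
    """Fraction of free neutrons not yet decayed after t seconds: exp(-t / tau)."""
    return math.exp(-t_seconds / tau)

def half_life(tau: float = NEUTRON_MEAN_LIFETIME_S) -> float:
    """Time after which half of a sample has decayed: tau * ln 2."""
    return tau * math.log(2)

print(f"half-life ~ {half_life() / 60:.1f} min")
for minutes in (1, 10, 30, 60):
    print(f"after {minutes:>2} min: {surviving_fraction(60 * minutes):.3f} remain")
```

Because the decay is random, "about a quarter of an hour" describes the average; any individual neutron may last much shorter or longer.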

All visible matter and the three particle forces that govern its interactions are bundled together in a tight theoretical framework: the Standard Model of particle physics. Developed in the 1960s and early 1970s, the Standard Model is a quantum theory that describes matter particles as well as forces in terms of fields, the undulating substances spread out in space that we have encountered before. According to the Standard Model, matter particles like electrons and quarks are nothing but local excitations of extended fields. Particle-like excitations of the force fields that act between matter particles are known as exchange particles or bosons. Photons, for example, the exchange particles mediating the electromagnetic force, are the individual particle-like quanta of the electromagnetic force field.

Its theoretical underpinnings in terms of quantum fields profoundly shape how the Standard Model conceives the microscopic workings of the particle world. Take the interaction between two electrons. When two electrons approach each other they deflect and scatter because like electric charges repel. The Standard Model describes this process in a tangible manner in terms of the exchange of a photon between the two electrons. When two electrons enter each other’s sphere of influence, it says, one electron emits a photon and the other absorbs it. As part of this exchange both electrons experience a little kick, which puts them on diverging trajectories. But that is not all. Feynman’s sum-over-histories formulation of quantum mechanics stipulates that one must add all possible ways one or more photons can be exchanged between the two electrons in order to compute their net scattering angle. This multiplicity of exchange histories means that one can’t quite pin down exactly where and when the interaction actually happened, a manifestation of Heisenberg’s uncertainty principle.
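Adding up all the photon-exchange histories is manageable in practice because, roughly speaking, each additional exchanged photon suppresses a contribution by a factor of order the fine-structure constant α = e²/(4πε₀ħc) ≈ 1/137. This sketch computes α from SI constants and prints its first few powers; it illustrates the size of the suppression only and is not a calculation of electron scattering, in which each term also carries its own numerical coefficient.

```python
import math

E_CHARGE = 1.602176634e-19      # elementary charge, C
EPSILON_0 = 8.8541878128e-12    # vacuum permittivity, F/m
HBAR = 1.054571817e-34          # reduced Planck constant, J s
C = 299_792_458.0               # speed of light, m/s

def fine_structure_constant() -> float:
    """Dimensionless electromagnetic coupling alpha = e^2 / (4 pi eps0 hbar c)."""
    return E_CHARGE**2 / (4.0 * math.pi * EPSILON_0 * HBAR * C)

alpha = fine_structure_constant()
print(f"alpha ~ 1/{1 / alpha:.3f}")
for n in range(1, 5):
    # rough suppression factor for a history with n additional photon exchanges
    print(f"alpha^{n} ~ {alpha**n:.1e}")
```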

Now, while photons are massless, just like the gravitons thought to convey gravity, the W and Z bosons responsible for the weak nuclear force are very heavy. This is why the weak force is a short-range force that operates only on subnuclear scales. In general, the bigger the mass of the exchange particle, the smaller the range of the force it conveys. (The strong force is short-range for a different reason: its exchange particles, the gluons, are massless but remain confined, together with the quarks, inside protons, neutrons, and other composite particles.) It is the masslessness of their microscopic quanta that makes electromagnetism and gravity reach across the universe.
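The rule of thumb that heavier exchange particles mean shorter-range forces can be made quantitative with Yukawa's estimate λ ≈ ħ/(mc) = ħc/(mc²). The sketch below uses ħc ≈ 197.327 MeV·fm and approximate masses for the W and Z bosons and for the charged pion, which in Yukawa's original picture carries the residual force between protons and neutrons; a massless photon gives an unlimited range.

```python
import math

HBAR_C_MEV_FM = 197.3269804  # hbar * c in MeV * femtometres (1 fm = 1e-15 m)

def force_range_m(mass_mev: float) -> float:
    """Yukawa range hbar/(m c) in metres for an exchange particle of rest energy m c^2 in MeV."""
    if mass_mev == 0.0:
        return math.inf  # a massless carrier gives a force of unlimited range
    return HBAR_C_MEV_FM / mass_mev * 1e-15

# approximate rest energies in MeV (assumed for illustration)
carriers = {"photon": 0.0, "charged pion": 139.57, "W boson": 80_377.0, "Z boson": 91_188.0}
for name, mass in carriers.items():
    print(f"{name:>12}: range ~ {force_range_m(mass):.2e} m")
```

The pion's range of about 1.4 × 10⁻¹⁵ m is close to the ten-trillionth of a centimeter quoted earlier for the nuclear force, while the W and Z give ranges several hundred times shorter still.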

So is this it for the Standard Model? Not quite! There is one last particle, the notoriously elusive Higgs boson, named after the British theoretical physicist Peter Higgs, who postulated its existence in 1964. The Higgs boson is the particle-like quantum of the Higgs field, an invisible scalar field that, much like the inflation field in the early universe, is thought to permeate all of space, a bit like a modern variant of the ether. The Higgs field is the crucial piece of the Standard Model that gives the elementary particles their masses. Electrons and quarks, and even the W and Z exchange particles, have no intrinsic mass in the Standard Model but acquire their masses from the resistance they experience as they move through the all-pervading Higgs field. It is as if particles are constantly wading through mud when they move about, and the resulting drag is what we call mass. The amount of mass that particles end up with depends on how strongly they feel the Higgs field. The heaviest quarks interact very strongly with the Higgs field, whereas the much lighter electrons do so far more weakly, and photons, which don’t interact with it at all, remain massless.
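In the Standard Model, a charged lepton or quark acquires its mass from its coupling strength y to the Higgs field through m = y v/√2, where v ≈ 246 GeV is the value the Higgs field takes throughout empty space. Inverting this relation shows how differently particles "feel" the field. The masses below are approximate values assumed for the sketch, and the relation applies to these matter particles only; the W and Z bosons acquire their masses through a different coupling.

```python
import math

HIGGS_VEV_GEV = 246.22  # vacuum expectation value v of the Higgs field, GeV

def yukawa_coupling(mass_gev: float, vev: float = HIGGS_VEV_GEV) -> float:
    """Coupling y to the Higgs field implied by m = y * v / sqrt(2)."""
    return math.sqrt(2.0) * mass_gev / vev

# approximate masses in GeV (assumed for illustration)
masses = {"electron": 0.000511, "muon": 0.10566, "bottom quark": 4.18, "top quark": 172.7}
for name, mass in masses.items():
    print(f"{name:>12}: y ~ {yukawa_coupling(mass):.2e}")
```

The top quark's coupling comes out close to 1, while the electron's is a few millionths; in this picture, that gap of more than five orders of magnitude is the entire difference between their masses.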

The idea of a scalar field that would endow other particles with mass was first proposed by the timid Higgs and independently by a more flamboyant duo, the American Robert Brout and the Belgian François Englert. The particle-like excitation of the field became known in Belgium as the Brout-Englert-Higgs boson, and as the Higgs boson elsewhere. It forms the capstone of the Standard Model and was finally found with the LHC nearly fifty years later, in 2012, in a discovery that counts as a real triumph of a long and deep symbiosis of curiosity-driven science, advanced engineering, and international cooperation. Much like the discovery of dark energy in cosmology, the experimental discovery of the Brout-Englert-Higgs boson shows once again that empty space is not empty but filled with invisible fields, one of which is responsible for the mass of the matter that makes up almost everything we encounter in daily life. It also demonstrates that nature really does make use of scalar fields as one of the key ingredients it has at its disposal to shape the physical world. As such, the discovery of the Brout-Englert-Higgs boson lends credence to the existence of a similar field that could have driven inflation in the very early universe.

It takes something like the LHC to create Higgs bosons because the Higgs field strongly interacts not only with other particles but also with itself, endowing its own particle-like quantum with a large mass, m. By Einstein’s E = mc², this means that it takes a great deal of energy, E, to excite the all-pervading Higgs field strongly enough that it pinches off, ever so briefly, a single, crackling quantum. As a matter of fact, the LHC succeeds in creating Higgs bosons in only about one in ten billion particle collisions. And these Higgs bosons enjoy but the briefest glimpse of existence, decaying almost instantly into a shower of lighter particles. Nevertheless, by carefully scanning through their decay products, particle physicists have been able to deduce some of the Higgs boson’s properties, including the fact that it weighs about as much as 130 protons put together. This may sound heavy, but most particle physicists find it incredibly light. In fact, the Higgs boson’s mass is a hundred million billionth of what many physicists would consider a natural value. Its value became even more puzzling in 2016 when, despite a major upgrade, the LHC didn’t conjure up any of the new elementary particles that theorists had hypothesized to make the tiny Higgs mass somewhat easier to swallow. A lightweight Higgs is important, however, for if the Higgs were much heavier, protons and neutrons would be heavier, too, far too heavy to form atoms. The unbearable lightness of the Higgs is yet another property that makes our universe propitiously fit for life.
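The two comparisons in this paragraph are simple ratios. The sketch assumes a Higgs mass of about 125.25 GeV, the proton's rest energy of 0.938 GeV, and, as the "natural value," the Planck mass of about 1.22 × 10¹⁹ GeV; that last choice is an assumption about which scale the comparison refers to. It also converts the Higgs mass to kilograms via E = mc².

```python
HIGGS_MASS_GEV = 125.25          # approximate measured Higgs mass (rest energy), GeV
PROTON_MASS_GEV = 0.93827208816  # proton rest energy, GeV
PLANCK_MASS_GEV = 1.22089e19     # assumed "natural" scale: the Planck mass, GeV
GEV_IN_J = 1.602176634e-10       # one GeV in joules
C = 299_792_458.0                # speed of light, m/s

protons_equivalent = HIGGS_MASS_GEV / PROTON_MASS_GEV
fraction_of_planck = HIGGS_MASS_GEV / PLANCK_MASS_GEV
higgs_mass_kg = HIGGS_MASS_GEV * GEV_IN_J / C**2  # m = E / c^2

print(f"Higgs ~ {protons_equivalent:.0f} proton masses")
print(f"Higgs / Planck mass ~ {fraction_of_planck:.1e}")
print(f"Higgs mass ~ {higgs_mass_kg:.2e} kg")
```

The first ratio comes out near 133, consistent with "about 130 protons," and the second near 10⁻¹⁷, a hundred million billionth.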

Now, the Standard Model doesn’t quite predict the values of the masses of particle species, including that of the Higgs. This is because the theory on its own doesn’t fix the strength with which each type of particle interacts with the Higgs field. All in all, the model contains about twenty parameters, key numbers such as particle masses and force strengths, whose often surprising values are not predetermined by the theory but must be measured experimentally and inserted into the formulas by hand. Physicists usually refer to these parameters as constants of nature, because they appear to be unchanging across much of the observable universe. With these constants in place, the theory offers an extraordinarily successful description of all we know about how visible matter behaves. In fact, by now the Standard Model is by far the best-tested physical theory ever. Some of its predictions have been verified to an accuracy of no fewer than fourteen decimal places!

Yet you might wonder if there isn’t a deeper, yet-to-be-discovered principle that determines the parameter values on which the immense successes of the Standard Model rely. The Higgs mass may appear unnaturally small to us, based on what we know, but is its value perhaps implied by higher mathematical truths? Or perhaps the constants aren’t really constant all across the universe. Perhaps they very slowly evolve, as part of cosmological evolution. Or maybe they change from one cosmic region to another, giving rise to island universes with non-Standard Models of particle physics?

Excerpted from ON THE ORIGIN OF TIME. Copyright © 2023 by Thomas Hertog. Published by Bantam, an imprint of Penguin Random House.