In 2005, Will Smith starred in a remake of The Matrix. It made a minor box office splash before quietly fading into obscurity. You might even remember it, which would be strange, because I just made this movie up.

And yet, just by reading those two sentences, there’s a solid chance that a good number of you believed it. Not only that, but some of you might have even thought to yourself, “Yeah, I do remember Will Smith starring in a remake of The Matrix,” even though that movie never existed.

This is a false memory, a psychological phenomenon in which you recall things that never happened. If the above story wasn’t enough to trick you, don’t worry: You probably have plenty of false memories going back to your childhood. That’s not a knock on you; it’s just human. We tell ourselves stories, and sometimes those stories get told and retold so often that they morph into something only loosely resembling the original event.

Even small nudges can change your memory. A study from the 1970s found that when witnesses to a car accident were asked how fast one car “smashed” into another, they remembered it going much faster than it likely did.

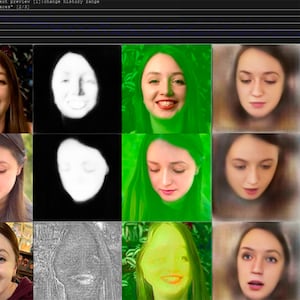

However, this very human phenomenon can also be easily weaponized against us to spread misinformation and cause real-world harm. With the proliferation of AI tools such as deepfake technology, there’s even fear that it could be used on a mass scale to manipulate elections and push false narratives.

That’s what’s at the heart of a study published July 6 in PLOS One, which found that deepfaked clips of movies that don’t actually exist caused participants to falsely remember them. Some viewers even rated the fake remakes as better than the originals, underscoring the alarming power of deepfake technology to manipulate memory.

There is one silver lining, however: the study’s authors found that simple text descriptions of the fake remakes were also effective at inducing false memories. On its own, that sounds like a bad thing, and it is! But the finding suggests that AI deepfakes may be no more effective at spreading misinformation than less technologically complex methods. What we end up with is a complicated picture of the harms the tech could create: certainly worth fearing, but not without its limitations.

“We shouldn't jump to predictions of dystopian futures based on our fears around emerging technologies,” lead study author Gillian Murphy, a misinformation researcher at University College Cork in Ireland, told The Daily Beast. “Yes there are very real harms posed by deep fakes, but we should always gather evidence for those harms in the first instance, before rushing to solve problems we’ve just assumed might exist.”

For the study, the authors recruited 436 people to view deepfaked movie clips that they were told came from remakes of real movies. These included Brad Pitt and Angelina Jolie in The Shining, Chris Pratt in Indiana Jones, Charlize Theron in Captain Marvel, and, of course, Will Smith in The Matrix. Participants also watched clips from actual remakes, including Carrie, Total Recall, and Charlie and the Chocolate Factory. Meanwhile, some participants were given a text description of a fake remake.

The researchers found that an average of 49 percent of participants believed that the deepfaked videos were real. Of this group, a good portion said the remake was better than the original. For example, 41 percent said the Captain Marvel remake was better than the original while 12 percent said The Matrix remake was better.

However, the findings showed that text descriptions of the fake remakes did as well as, and occasionally better than, the deepfaked videos at inducing false memories. This suggests that tried-and-true methods of spreading misinformation and distorting reality, such as fake news articles, might be just as effective as AI.

“Our findings are not especially concerning, as they don’t suggest any uniquely powerful threat of deepfakes over and above existing forms of misinformation,” Murphy explained. However, she added that the study only took a look at short-term memory. “It may be that deepfakes are a more powerful vehicle for spreading misinformation because for example they are more likely to go viral or are more memorable over the long-term.”

This speaks to a broader issue at the bedrock of misinformation: motivated reasoning, or the way people let their biases shape how they perceive information. For example, if you believe the 2020 election was stolen, you’re more likely to believe a deepfaked video of someone stuffing ballot boxes than someone who believes it wasn’t.

“This is the big problem with disinformation,” Christopher Schwartz, a cybersecurity and disinformation researcher at the Rochester Institute of Technology who wasn’t involved in the study, told The Daily Beast. “More than the quality of information and the quality of sources is the problem that when people want something to be true, they will try to will it to be true.”

Motivated reasoning is a large part of why our current cultural and political landscape is the way it is, according to Schwartz. Even if people aren’t necessarily swayed by deepfakes or fake news, they might be more inclined to seek out articles and opinions that affirm their worldview. We trap ourselves in digital and social bubbles where we see and hear the same thoughts repeated until we’re convinced that what we believe is the only truth.

Such a climate, then, becomes fertile ground for something like an AI deepfake of Donald Trump being arrested or the Pentagon being set on fire to take root and spread out of control. Sure, it might seem ridiculous, but when it affirms what we already believe, our brain will make it as real as we need it to be.

Luckily, there is hope. Murphy said that participants in the study largely shared a worry that using AI to deepfake characters in movies could lead to abuse of the actors involved. This suggests that people might be largely averse to AI and deepfakes, which could discourage their future use in media.

“They did not want to watch a re-casted movie where the performers were not adequately compensated or did not consent to their inclusion in the project,” she said. “They viewed such a process as ‘inauthentic’ and ‘artistically bankrupt.’”

As for misinformation, Schwartz said the solution lies in two of the most contentious issues in modern-day American society: education and the media. First, people need to be technologically literate enough to identify a deepfake. Second, they need to be willing to challenge their assumptions when it comes to images, articles, or other media that affirm their beliefs. This can and should begin in classrooms, where students can learn about these issues early and often.

Likewise, newsrooms and media organizations have a duty not only to make the public aware of the dangers that AI deepfakes pose but also to call out misinformation when it occurs.

“These articles raise awareness, which will have a sort of vaccination style effect on [AI misinformation],” Schwartz said. “People in general will be on guard, and they will know that they're being targeted.”

So consider this your warning: We’re in an age where generative AI tools that create text, images, and videos are proliferating at a staggering pace. These tools will only become more powerful and commonplace with each passing day. They will be used against you, and they already have been.

It can be scary, and it’s definitely confusing. But knowing that it exists is the first step to figuring out what’s true and what’s fake, and that might make all the difference.

“[We have] the tools we need to fight stuff like this,” Schwartz said. “Our ability to reason is God given. We need to use it and we can use it.”